Reinforcement Learning

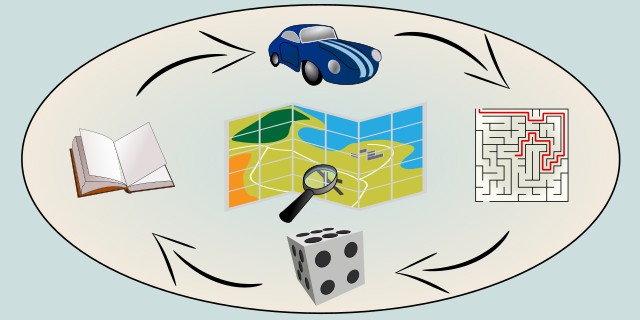

Reinforcement learning is a type of machine learning where an agent learns to make decisions by taking actions in an environment to maximize cumulative reward. It involves learning from the consequences of actions rather than from explicit instructions.
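"Cumulative reward" is often made precise as a discounted return, where later rewards count slightly less than earlier ones. The sketch below shows that calculation; the function name `discounted_return` and the discount factor `gamma` are illustrative assumptions, not something this page defines.

```python
def discounted_return(rewards, gamma=0.9):
    """Sum the rewards of one episode, weighting the reward at step t by gamma**t.

    gamma is an assumed discount factor; gamma=1.0 gives the plain sum.
    """
    total = 0.0
    # Work backwards so each step adds its reward to the discounted future total.
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


# Hypothetical episode: no reward for two steps, then a reward of 1.
episode_rewards = [0.0, 0.0, 1.0]
episode_return = discounted_return(episode_rewards, gamma=0.9)
```

An agent that maximizes this quantity prefers reaching the reward sooner, because each extra step multiplies the final reward by `gamma` once more.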

How should the agent learn to make decisions?

Should the agent learn purely from trial and error, or should it also learn a model of the environment to plan ahead?

Tips:

- If your agent should learn from direct interaction without building an internal model of the environment, choose Model-Free.

- If your agent should learn a model of how the environment behaves and use it to simulate and plan, choose Model-Based.

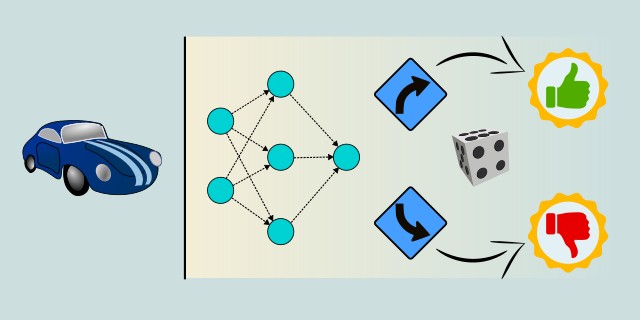

Model-Free

Model-free reinforcement learning methods learn to make decisions based on the rewards received from the environment without explicitly modeling the environment's dynamics. They are particularly useful in complex environments where modeling is difficult or impossible.

Rather than learning the environment's dynamics, Model-Free methods learn a policy directly from trial-and-error interaction, typically by estimating the value of states or state-action pairs and using those estimates to guide decisions. Popular Model-Free approaches include Q-Learning and Deep Q-Networks (DQN). They are often simpler to implement than Model-Based methods, but they typically need large amounts of experience to converge to an effective policy.
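As a rough illustration of Q-Learning, here is a minimal tabular sketch. The five-state chain environment, the `step` function, and every hyperparameter value are hypothetical choices made for this example; it is not a reference implementation.

```python
import random

N_STATES = 5          # hypothetical toy chain: states 0..4, state 4 is the goal
ACTIONS = (0, 1)      # 0 = step left, 1 = step right


def step(state, action):
    """Toy environment (an assumption for this sketch): (next_state, reward, done)."""
    next_state = max(0, state - 1) if action == 0 else state + 1
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def q_learning(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]   # q[state][action]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: explore with probability epsilon (or on ties),
            # otherwise take the action with the higher estimated value.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.choice(ACTIONS)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = step(state, action)
            # Q-Learning update: move the estimate toward
            # reward + gamma * (best estimated value of the next state).
            target = reward + (0.0 if done else gamma * max(q[next_state]))
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q


q_table = q_learning()
# Greedy action in each non-terminal state after training.
greedy_policy = [max(ACTIONS, key=lambda a: q_table[s][a]) for s in range(N_STATES - 1)]
```

The agent never sees the rules inside `step`; it only observes the resulting states and rewards, which is what makes the approach model-free.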

Use Case Examples:

- Game Playing: Training agents to play Atari video games from screen pixels and score alone, without prior knowledge of the game mechanics.

- Robotics Control: Teaching robots to perform tasks through repeated interactions.

- Recommendation Systems: Optimizing recommendations by learning from user feedback over time.

- Autonomous Driving: Learning driving policies from real-world driving experience.

- Resource Management: Dynamic allocation of computing resources in data centers.

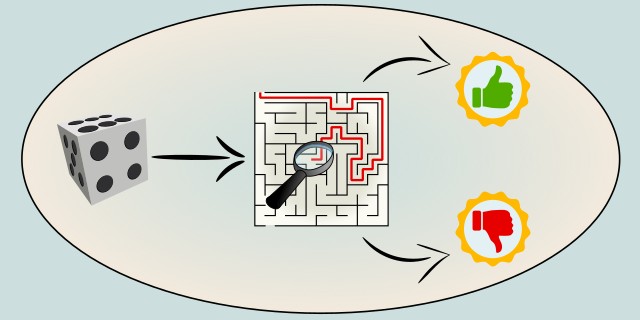

Model-Based

Model-based reinforcement learning methods involve creating an internal model of the environment's dynamics, which allows for planning and decision-making based on predicted outcomes. These methods can significantly improve learning efficiency by enabling agents to simulate and evaluate potential actions before taking them.

The model, whether learned or given, predicts the next state and reward for a given state and action, so the agent can simulate candidate actions and plan ahead instead of trying everything in the real environment. Dyna-Q, for example, combines direct learning from real interaction with planning on simulated experience drawn from the learned model. The extra planning costs more computation per step, but Model-Based methods often need fewer real interactions than Model-Free methods to learn an effective policy. They are especially useful when a reliable model of the environment is available or can be approximated.
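To make the Dyna-Q idea concrete, here is a minimal tabular sketch. The five-state chain environment, the `step` function, the dictionary-based model, and all hyperparameter values are hypothetical choices for this example, and the model assumes a deterministic environment.

```python
import random

N_STATES = 5          # hypothetical toy chain: states 0..4, state 4 is the goal
ACTIONS = (0, 1)      # 0 = step left, 1 = step right


def step(state, action):
    """Real environment (assumed deterministic): (next_state, reward, done)."""
    next_state = max(0, state - 1) if action == 0 else state + 1
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def dyna_q(episodes=50, planning_steps=20, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]   # q[state][action]
    model = {}        # learned model: (state, action) -> (next_state, reward, done)
    real_steps = 0    # number of interactions with the real environment

    def update(s, a, r, s2, done):
        # Same value update for real and simulated transitions.
        target = r + (0.0 if done else gamma * max(q[s2]))
        q[s][a] += alpha * (target - q[s][a])

    for _ in range(episodes):
        state, done = 0, False
        while not done:
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.choice(ACTIONS)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = step(state, action)
            real_steps += 1
            update(state, action, reward, next_state, done)       # direct learning
            model[(state, action)] = (next_state, reward, done)   # model learning
            # Planning: replay transitions sampled from the learned model.
            for _ in range(planning_steps):
                s, a = rng.choice(list(model))
                s2, r, d = model[(s, a)]
                update(s, a, r, s2, d)
            state = next_state
    return q, real_steps


q_table, real_steps = dyna_q()
greedy_policy = [max(ACTIONS, key=lambda a: q_table[s][a]) for s in range(N_STATES - 1)]
```

Each real step here is followed by `planning_steps` simulated updates, so the value estimates improve faster per real interaction at the cost of more computation per step.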

Use Case Examples:

- Robotics Navigation: Planning robot paths by simulating possible future movements.

- Inventory Management: Optimizing stock levels by predicting future demand and supply.

- Autonomous Vehicles: Using predictive models to plan safe and efficient driving strategies.

- Healthcare Treatment Planning: Simulating patient responses to treatments for personalized medicine.

- Game AI: Creating opponents that anticipate player moves by modeling the game environment.
