Model Free

Model-free reinforcement learning methods learn to make decisions based on the rewards received from the environment without explicitly modeling the environment's dynamics. They are particularly useful in complex environments where modeling is difficult or impossible.

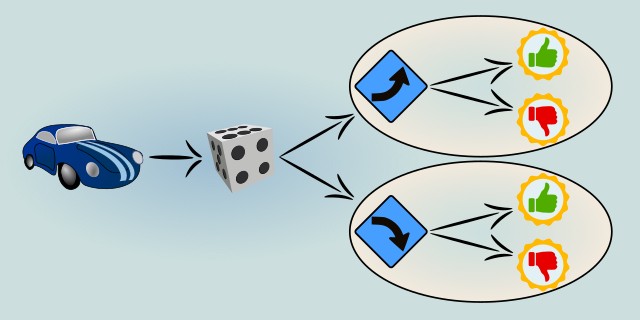

How complex is your environment and state space?

Can you represent the environment with a simple Q-table, or do you need neural networks to handle high-dimensional input?

Tips:

- If your environment has a small, discrete state-action space that fits in a table, choose Q-Learning.

- If you're working with a large or continuous state space (like images or sensor data), choose DQN (Deep Q-Network).

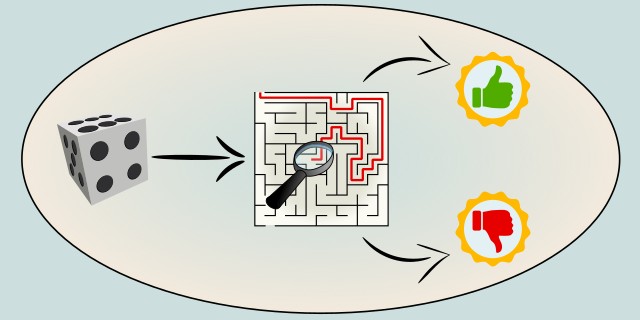

Q-Learning

Q-Learning is a model-free reinforcement learning algorithm that learns the value of actions in given states to find an optimal policy. It iteratively updates a Q-value table based on rewards received from interactions with the environment, without needing a model of the environment.
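For reference, the standard form of the Q-Learning update can be written as below. The symbols are not defined in the text above and are introduced here as assumptions: $\alpha$ is the learning rate, $\gamma$ is the discount factor, $r_{t+1}$ is the reward received after taking action $a_t$ in state $s_t$, and $s_{t+1}$ is the resulting state.

$$
Q(s_t, a_t) \leftarrow Q(s_t, a_t) + \alpha \left[ r_{t+1} + \gamma \max_{a'} Q(s_{t+1}, a') - Q(s_t, a_t) \right]
$$

The bracketed term is the temporal-difference error: the gap between the current estimate and the observed reward plus the discounted value of the best next action.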

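Q-Learning uses a simple update rule to improve its estimates of the best action to take in each state: after each step, it nudges the stored Q-value toward the observed reward plus the discounted value of the best action in the next state. It balances exploration (trying new actions) and exploitation (choosing the best-known action) to learn the optimal policy over time. Being model-free, it learns directly from experience, which makes it versatile for many applications. However, it may require a large number of interactions to converge, especially in large or complex state spaces.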
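To make the update rule and the exploration/exploitation trade-off concrete, here is a minimal sketch of tabular Q-Learning. The environment is a hypothetical five-state corridor invented for illustration (it does not come from this page), and the function name `train_q_learning` and the hyperparameter values (`alpha`, `gamma`, `epsilon`) are assumptions, not recommended settings.

```python
import random


def train_q_learning(n_states=5, episodes=500, alpha=0.1, gamma=0.9,
                     epsilon=0.1, seed=0):
    """Tabular Q-learning on a hypothetical 1-D corridor.

    States are 0..n_states-1. Action 0 moves left, action 1 moves right.
    Reaching the rightmost state yields reward 1 and ends the episode.
    """
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(n_states)]  # Q-table: q[state][action]
    goal = n_states - 1

    for _ in range(episodes):
        state = 0
        for _ in range(100):  # step cap so every episode terminates
            # Epsilon-greedy: explore with probability epsilon (or on ties),
            # otherwise exploit the action with the higher Q-value.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1

            # Hypothetical environment dynamics (the agent never reads these
            # directly; it only observes next_state and reward).
            next_state = max(0, state - 1) if action == 0 else state + 1
            done = next_state == goal
            reward = 1.0 if done else 0.0

            # Q-learning update: move Q(s, a) toward the TD target.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])

            state = next_state
            if done:
                break
    return q


def greedy_policy(q):
    """Return the best-known action for each state in the Q-table."""
    return [0 if row[0] > row[1] else 1 for row in q]
```

Calling `greedy_policy(train_q_learning())` yields the learned action for each state. In this toy corridor the agent should learn to move right from every non-terminal state, but a realistic environment would typically need far more episodes and some tuning.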

Use Case Examples:

- Robot Pathfinding: Learning optimal routes without prior knowledge of the environment.

- Game AI: Developing strategies in board games or video games through trial and error.

- Resource Allocation: Optimizing dynamic resource distribution in networks or systems.

- Autonomous Drones: Learning flight maneuvers through environmental interaction.

- Smart Grid Management: Balancing load and supply using adaptive policies.

| Criterion | Recommendation |
|---|---|
| Dataset Size | 🟡 Medium |
| Training Complexity | 🔴 High |

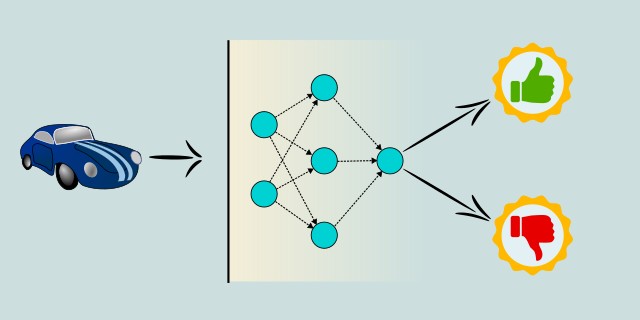

Deep Q-Network (DQN)

Deep Q-Network (DQN) is an advanced, model-free reinforcement learning algorithm that combines Q-Learning with deep neural networks. It uses a neural network to approximate the Q-value function, enabling it to handle large and complex state spaces where tabular Q-Learning is impractical.

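DQN introduced two key techniques to stabilize training. Experience replay stores past transitions in a buffer and trains on random samples from it, which breaks the correlation between consecutive steps. A target network is a periodically updated copy of the Q-network that provides stable targets for the update. Together they allow agents to learn effective policies directly from high-dimensional sensory inputs like images. While powerful, DQN requires substantial computational resources and careful hyperparameter tuning to converge. It has been applied successfully in several domains, especially gaming and robotics.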
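The two stabilization techniques can be sketched without a deep learning framework. The example below is illustrative only: it swaps in a hypothetical linear Q-function (`q_values`) for the deep network, and the names `ReplayBuffer`, `td_targets`, and `sync_target` are assumptions made for this sketch. It shows how transitions are stored and sampled, and how the frozen target parameters produce the training targets. The gradient step on the online network is omitted.

```python
import copy
import random
from collections import deque


class ReplayBuffer:
    """Fixed-size store of past transitions, sampled uniformly at random."""

    def __init__(self, capacity, seed=0):
        self.buffer = deque(maxlen=capacity)  # oldest items drop off first
        self.rng = random.Random(seed)

    def push(self, state, action, reward, next_state, done):
        self.buffer.append((state, action, reward, next_state, done))

    def sample(self, batch_size):
        # Random sampling breaks the correlation between consecutive steps.
        return self.rng.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)


def q_values(params, state):
    """Hypothetical linear stand-in for the Q-network.

    params holds one weight vector per action; returns one Q-value per action.
    """
    return [sum(w * x for w, x in zip(weights, state)) for weights in params]


def td_targets(batch, target_params, gamma=0.99):
    """Compute r + gamma * max_a' Q_target(s', a'), or just r if terminal."""
    targets = []
    for _state, _action, reward, next_state, done in batch:
        bootstrap = 0.0 if done else gamma * max(q_values(target_params, next_state))
        targets.append(reward + bootstrap)
    return targets


def sync_target(online_params):
    """Copy the online parameters into a fresh, independent target copy."""
    return copy.deepcopy(online_params)
```

In a training loop, the agent would push each transition into the buffer, sample a batch once enough transitions are stored, regress the online network's Q-values toward `td_targets(...)`, and call `sync_target` only every fixed number of steps so the targets do not shift with every update.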

Use Case Examples:

- Atari Game Playing: Learning to play video games directly from raw pixels.

- Robotics Control: Teaching robots complex tasks in simulation before real-world deployment.

- Autonomous Driving: Decision-making based on sensory data in dynamic environments.

- Financial Trading: Learning trading strategies from market data.

- Smart Home Automation: Optimizing energy use and device control based on environment feedback.

| Criterion | Recommendation |
|---|---|
| Dataset Size | 🟡 Medium |
| Training Complexity | 🔴 High |
