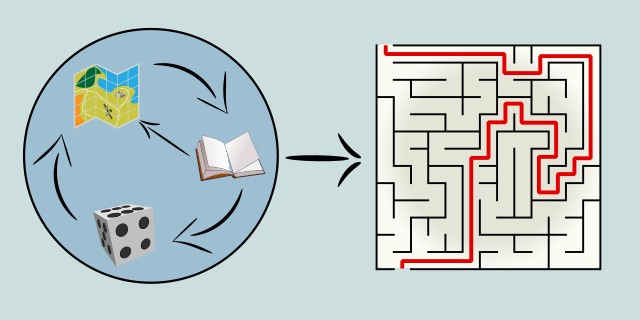

Model-Based

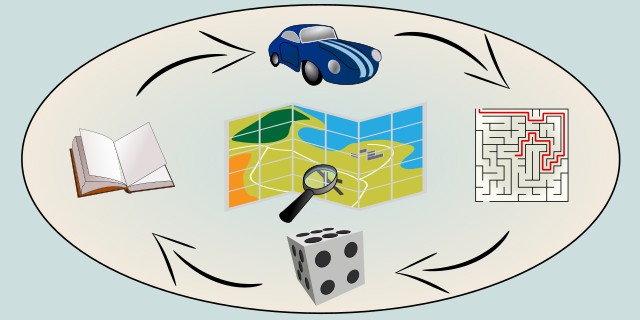

Model-based reinforcement learning methods build an internal model of the environment's dynamics: how states transition and what rewards follow each action. The agent uses this model to plan and make decisions based on predicted outcomes. Because the agent can simulate and evaluate candidate actions before taking them, these methods can substantially improve learning efficiency, often needing fewer real interactions with the environment.
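Here is a minimal sketch of planning with a model. It assumes the model is already known and tabular. The Python below defines a hypothetical three-state MDP (`MODEL`) and runs value iteration over it. It then chooses an action by one-step lookahead, simulating each action with the model instead of trying it in the real environment. The names `MODEL`, `value_iteration` and `plan_action` are illustrative and do not come from any library.

```python
# Hypothetical 3-state chain MDP (illustrative only):
# MODEL[state][action] -> list of (probability, next_state, reward)
MODEL = {
    0: {"left": [(1.0, 0, 0.0)], "right": [(1.0, 1, 0.0)]},
    1: {"left": [(1.0, 0, 0.0)], "right": [(1.0, 2, 1.0)]},
    2: {"left": [(1.0, 2, 0.0)], "right": [(1.0, 2, 0.0)]},  # absorbing state
}


def expected_return(outcomes, values, gamma):
    """Predicted return of one action, computed purely from the model."""
    return sum(p * (r + gamma * values[s_next]) for p, s_next, r in outcomes)


def value_iteration(model, gamma=0.9, tol=1e-8):
    """Plan with the model: repeat Bellman optimality backups until values settle."""
    values = {s: 0.0 for s in model}
    while True:
        delta = 0.0
        for s, actions in model.items():
            best = max(expected_return(o, values, gamma) for o in actions.values())
            delta = max(delta, abs(best - values[s]))
            values[s] = best  # in-place (Gauss-Seidel style) update
        if delta < tol:
            return values


def plan_action(model, values, state, gamma=0.9):
    """One-step lookahead: simulate each action with the model, pick the best."""
    return max(
        model[state],
        key=lambda a: expected_return(model[state][a], values, gamma),
    )
```

With `gamma=0.9`, `value_iteration(MODEL)` converges to values of 0.9, 1.0 and 0.0 for states 0, 1 and 2. `plan_action(MODEL, values, 0)` returns `"right"`. The agent reaches that choice without ever stepping the real environment.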

Are you able to model the environment's dynamics?

Do you want to build an internal model of the environment to plan ahead and improve learning efficiency?

Tips:

- If you can simulate or approximate how the environment behaves (state transitions and rewards), model-based learning is for you.
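When the dynamics are not given, one simple approach is to approximate them by fitting a tabular model from observed transitions. The sketch below assumes discrete states and actions and a reward that depends only on the state-action pair. It estimates transition probabilities by counting and rewards by averaging, then samples simulated steps from the fitted model. `estimate_model` and `simulate_step` are hypothetical helpers for illustration, not part of any RL library.

```python
import random
from collections import defaultdict


def estimate_model(transitions):
    """Fit a tabular maximum-likelihood model from (s, a, r, s_next) samples.

    Returns {(s, a): (next_state_probs, mean_reward)}. Assumes the reward
    depends only on (s, a), so it is summarized by its sample mean.
    """
    counts = defaultdict(lambda: defaultdict(int))
    reward_sums = defaultdict(float)
    for s, a, r, s_next in transitions:
        counts[(s, a)][s_next] += 1
        reward_sums[(s, a)] += r

    model = {}
    for sa, next_counts in counts.items():
        total = sum(next_counts.values())
        probs = {s2: n / total for s2, n in next_counts.items()}
        model[sa] = (probs, reward_sums[sa] / total)
    return model


def simulate_step(model, state, action, rng=random):
    """Sample an imagined transition from the learned model instead of the real env."""
    probs, mean_reward = model[(state, action)]
    next_state = rng.choices(list(probs), weights=list(probs.values()), k=1)[0]
    return next_state, mean_reward
```

Consider the samples `[(0, "right", 0.0, 1), (0, "right", 0.0, 1), (0, "right", 0.0, 0), (1, "right", 1.0, 2)]`. From these, the model assigns `(0, "right")` next-state probabilities of 2/3 for state 1 and 1/3 for state 0. `simulate_step(model, 1, "right")` always returns `(2, 1.0)`.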
