Semi-Supervised Learning

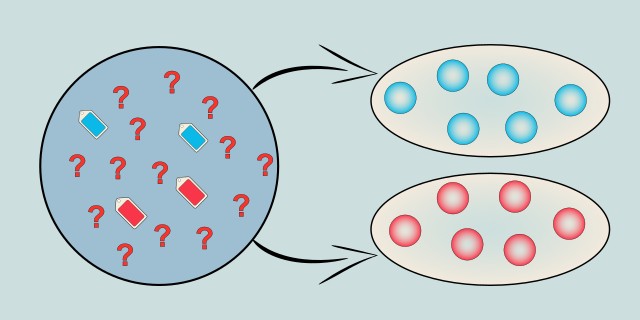

Semi-supervised learning sits between supervised and unsupervised learning: a model is trained on a small amount of labeled data together with a much larger set of unlabeled data. This approach is particularly useful when labels are expensive or time-consuming to obtain, because the unlabeled data can reveal structure, such as clusters, that the few labeled examples alone would miss.

How should the model use the small amount of labeled data?

Do you want labels to spread through a similarity graph built from the data, or do you prefer an SVM-based approach that incorporates the unlabeled data into the margin optimization?

Tips:

- If your data can be represented as a graph and you want labels to spread to similar neighbors, choose Label Propagation.

- If you prefer a margin-based model, such as a Support Vector Machine, that learns from both labeled and unlabeled data, choose Semi-Supervised SVM.

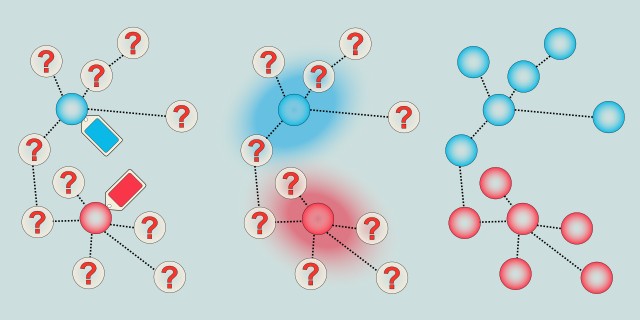

Label Propagation

Label Propagation is a graph-based semi-supervised learning algorithm that spreads labels from a small set of labeled data points to unlabeled points according to their similarity. It exploits the structure of the data by building a graph in which nodes represent instances and weighted edges encode pairwise similarity. Labels then propagate along these edges until they stabilize.
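The propagation step can be written compactly. The formulation below assumes a fully connected graph with RBF (Gaussian) edge weights controlled by a width parameter $\gamma$. This is one common choice; k-nearest-neighbour graphs are also widely used. $F \in \mathbb{R}^{n \times k}$ holds one score per class for each of the $n$ points, and $Y_L$ holds one-hot rows for the labeled points. The subscripts $L$ and $U$ denote the labeled and unlabeled blocks.

$$
W_{ij} = \exp\!\left(-\gamma \lVert x_i - x_j \rVert^2\right) \ (i \neq j), \qquad W_{ii} = 0, \qquad D = \operatorname{diag}\Big(\textstyle\sum_j W_{ij}\Big), \qquad P = D^{-1} W
$$

$$
F^{(t+1)} = P\,F^{(t)}, \qquad F_L^{(t+1)} \leftarrow Y_L, \qquad \hat{y}_i = \arg\max_c F_{ic}
$$

Each iteration lets every point take a similarity-weighted average of its neighbours' class scores. The labeled rows are then reset to their known labels. At the fixed point the unlabeled block satisfies $F_U = P_{UL} Y_L + P_{UU} F_U$, that is, $F_U = (I - P_{UU})^{-1} P_{UL} Y_L$.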

Label Propagation is effective when labeled data is scarce but unlabeled data is abundant. It assumes that similar instances are likely to share the same label, which makes it well suited to datasets with a clear cluster structure. However, its performance depends heavily on how the similarity graph is built (for example, the choice of kernel width or number of neighbors). It can also be sensitive to noise and outliers.
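To make the propagation loop concrete, here is a minimal NumPy sketch. It assumes a fully connected graph with RBF edge weights and follows the common convention of marking unlabeled points with `-1`. The function name `label_propagation`, its `gamma` default, and the two-blob toy data are illustrative assumptions, not part of any library API.

```python
import numpy as np


def label_propagation(X, y, gamma=1.0, max_iter=1000, tol=1e-6):
    """Spread labels over a fully connected RBF similarity graph (illustrative sketch).

    X: (n, d) feature array.
    y: (n,) integer labels; known classes are >= 0 and unlabeled points are -1.
    gamma: RBF width; an assumed default that would need tuning on real data.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    labeled = y != -1
    classes = np.unique(y[labeled])

    # Edge weights W_ij = exp(-gamma * ||x_i - x_j||^2), with no self-loops.
    sq_dists = ((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=-1)
    W = np.exp(-gamma * sq_dists)
    np.fill_diagonal(W, 0.0)

    # Row-normalise: row i of P is a distribution over i's neighbours.
    row_sums = W.sum(axis=1, keepdims=True)
    row_sums[row_sums == 0] = 1.0  # guard against isolated points
    P = W / row_sums

    # F holds one score per class for every point; labeled rows are one-hot.
    Y_L = (y[labeled][:, None] == classes[None, :]).astype(float)
    F = np.zeros((len(y), len(classes)))
    F[labeled] = Y_L

    for _ in range(max_iter):
        F_new = P @ F          # each point averages its neighbours' scores
        F_new[labeled] = Y_L   # clamp the known labels
        converged = np.abs(F_new - F).max() < tol
        F = F_new
        if converged:
            break

    # Unlabeled points that received no score at all fall back to classes[0].
    return classes[F.argmax(axis=1)]


# Toy usage: two well-separated blobs with a single labeled point in each.
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(0.0, 0.3, (50, 2)), rng.normal(3.0, 0.3, (50, 2))])
y = np.full(100, -1)
y[0], y[50] = 0, 1
pred = label_propagation(X, y)
```

The dense n × n weight matrix makes this sketch quadratic in memory, so a sparse k-nearest-neighbour graph is the usual choice for larger datasets. For real projects, scikit-learn provides `sklearn.semi_supervised.LabelPropagation` and the related `LabelSpreading`. Both use the same `-1` convention for unlabeled samples and support RBF and k-nearest-neighbour kernels.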

Use Case Examples:

- Social Network Analysis: Inferring user interests or communities based on limited labeled profiles.

- Text Classification: Labeling documents when only a few are initially categorized.

- Image Recognition: Assigning labels to images in datasets with many unlabeled samples.

- Fraud Detection: Propagating known fraud labels to similar but unlabeled transactions.

- Biological Data: Classifying genes or proteins with limited annotated samples.

| Criterion | Recommendation |
|---|---|
| Dataset Size | 🟡 Medium |
| Training Complexity | 🟢 Low |

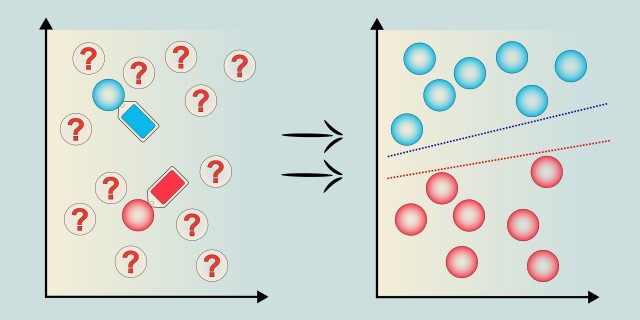

Semi-Supervised SVM

Semi-Supervised Support Vector Machine (Semi-Supervised SVM, often abbreviated S3VM) extends the standard SVM so that both labeled and unlabeled data shape the decision boundary during training. It seeks a boundary that separates the labeled examples while also accounting for how the unlabeled data is distributed. This can improve classification performance when labeled data is limited.
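One common way to write this objective for a linear S3VM is shown below. It uses $l$ labeled points $(x_i, y_i)$ with $y_i \in \{-1, +1\}$ and $u$ unlabeled points $x_j$. The weights $\lambda$ and $\lambda_u$ are regularization hyperparameters, and the exact normalization differs between formulations.

$$
\min_{w,\,b}\ \frac{\lambda}{2}\lVert w \rVert^2
+ \frac{1}{l}\sum_{i=1}^{l} \max\bigl(0,\ 1 - y_i f(x_i)\bigr)
+ \frac{\lambda_u}{u}\sum_{j=l+1}^{l+u} \max\bigl(0,\ 1 - \lvert f(x_j) \rvert\bigr),
\qquad f(x) = w^\top x + b
$$

The first two terms form the usual soft-margin SVM objective on the labeled data. The last term penalizes unlabeled points that fall inside the margin ($\lvert f(x_j) \rvert < 1$), whatever side they are on. This term is not convex in $(w, b)$. Many formulations also add a class-balance constraint so that the unlabeled points are not all pushed to one class.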

Semi-Supervised SVM works well when labeled data is scarce but the distribution of the unlabeled data carries useful information about the underlying structure. The algorithm maximizes the margin while steering the decision boundary away from dense regions of unlabeled points, so that the boundary passes through low-density gaps between groups. Because the labels of the unlabeled points are unknown, the resulting optimization problem is non-convex. Training is therefore more computationally intensive than for a standard SVM and can end in a poor local optimum.
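The following is a minimal NumPy sketch of a linear S3VM trained by full-batch subgradient descent. Labeled points that violate the margin are penalized with the usual hinge loss. Unlabeled points inside the margin are penalized with the symmetric hinge `max(0, 1 - |f(x)|)`. The function names `s3vm_fit` and `s3vm_predict` are hypothetical, as are the hyperparameter values, the linear ramp-up of the unlabeled weight, and the toy data. The sketch omits kernels and the class-balance constraint that practical S3VM solvers usually include.

```python
import numpy as np


def s3vm_fit(X_l, y_l, X_u, lam=0.01, lam_u=0.5, lr=0.1, epochs=500):
    """Linear S3VM via full-batch subgradient descent (illustrative sketch).

    X_l: (l, d) labeled features; y_l: (l,) labels in {-1, +1}.
    X_u: (u, d) unlabeled features.
    lam, lam_u, lr, epochs: assumed hyperparameters, not tuned values.
    Returns (w, b) for the decision function f(x) = w @ x + b.
    """
    X_l = np.asarray(X_l, dtype=float)
    y_l = np.asarray(y_l, dtype=float)
    X_u = np.asarray(X_u, dtype=float)
    w = np.zeros(X_l.shape[1])
    b = 0.0

    for epoch in range(epochs):
        # Ramp the unlabeled weight from 0 up to lam_u over the first half of
        # training, so the boundary is first fitted to the labeled points.
        lam_u_t = lam_u * min(1.0, epoch / (epochs / 2))

        # Labeled hinge loss max(0, 1 - y f(x)): only margin violators contribute.
        f_l = X_l @ w + b
        act_l = y_l * f_l < 1
        grad_w = lam * w - (y_l[act_l][:, None] * X_l[act_l]).sum(axis=0) / len(y_l)
        grad_b = -y_l[act_l].sum() / len(y_l)

        # Unlabeled symmetric hinge max(0, 1 - |f(x)|): unlabeled points inside
        # the margin push the boundary away from themselves.
        f_u = X_u @ w + b
        act_u = np.abs(f_u) < 1
        s = np.sign(f_u[act_u])
        grad_w -= lam_u_t * (s[:, None] * X_u[act_u]).sum(axis=0) / len(X_u)
        grad_b -= lam_u_t * s.sum() / len(X_u)

        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def s3vm_predict(X, w, b):
    """Return +1 or -1 according to the sign of f(x) = w @ x + b."""
    return np.where(np.asarray(X, dtype=float) @ w + b >= 0, 1, -1)


# Toy usage: two Gaussian blobs, one labeled point at each blob's centre.
rng = np.random.default_rng(0)
X_u = np.vstack([rng.normal([2.0, 0.0], 0.5, (100, 2)),
                 rng.normal([-2.0, 0.0], 0.5, (100, 2))])
y_u_true = np.array([1] * 100 + [-1] * 100)
X_l = np.array([[2.0, 0.0], [-2.0, 0.0]])
y_l = np.array([1, -1])

w, b = s3vm_fit(X_l, y_l, X_u)
accuracy = (s3vm_predict(X_u, w, b) == y_u_true).mean()
```

The labeled points sit at the blob centres, so a purely supervised fit already separates this toy data well. The example therefore checks the mechanics rather than demonstrating a gain from the unlabeled term. scikit-learn does not include an S3VM. Its `sklearn.semi_supervised.SelfTrainingClassifier` wrapped around an `SVC` is a related but different technique: it uses self-training rather than margin-based optimization over the unlabeled points.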

Use Case Examples:

- Text Categorization: Classifying documents with few labeled examples but many unlabeled texts.

- Medical Diagnosis: Using limited labeled patient data combined with abundant unlabeled records.

- Spam Filtering: Enhancing spam detection by using unlabeled emails alongside labeled ones.

- Speech Recognition: Improving models by incorporating unlabeled speech data.

- Image Classification: Leveraging unlabeled images to improve classification accuracy with few labeled samples.

| Criterion | Recommendation |
|---|---|
| Dataset Size | 🟡 Medium |
| Training Complexity | 🟡 Medium |
