Multi-Class Classification

Multi-class classification is a classification task in which each instance is assigned to exactly one of three or more classes. By contrast, binary classification assigns each instance to one of only two classes.

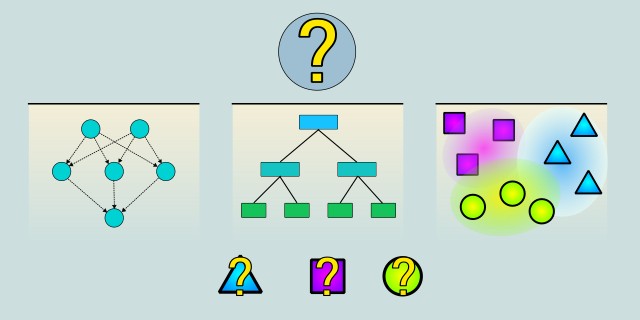

Which algorithm best fits your multi-class classification problem?

Do you need interpretability, simplicity, or the power of deep learning?

Tips:

- If you want a robust and accurate model with built-in feature importance, choose Random Forest.

- If you prefer a simple, instance-based model with no explicit training phase, go with k-NN (k-Nearest Neighbors).

- If you have a large dataset and need to capture complex patterns, choose Neural Networks.

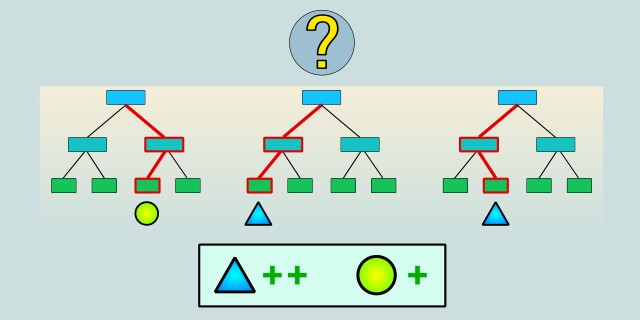

Random Forest

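Random Forest is an ensemble learning method that builds multiple decision trees during training and, for classification, outputs the mode (majority vote) of their predictions. Each tree is typically trained on a bootstrap sample of the data and considers only a random subset of features at each split, so the trees make partly independent errors. Aggregating their votes reduces overfitting and usually improves accuracy compared to a single decision tree.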

Random Forest handles multi-class problems natively and is relatively robust to noisy data and outliers. It provides built-in estimates of feature importance, is relatively easy to tune, and usually performs well without extensive feature engineering. However, training can be computationally intensive on very large datasets or with many trees.
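To make the voting mechanism concrete, here is a minimal pure-Python sketch of a random forest. It is a teaching illustration, not a production implementation (libraries such as scikit-learn provide `RandomForestClassifier` for real use). It assumes small lists of numeric features, grows shallow trees using Gini impurity with midpoint thresholds, trains each tree on a bootstrap sample, considers one randomly chosen feature per split, and predicts by majority vote. All function names, default parameters, and the toy data are hypothetical.

```python
import random
from collections import Counter

# Hypothetical helper names: a teaching sketch, not a library API.


def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def build_tree(X, y, max_depth, n_features, rng):
    """Grow a shallow decision tree; each split considers a random subset of features."""
    majority = Counter(y).most_common(1)[0][0]
    if max_depth == 0 or len(set(y)) == 1:
        return majority  # leaf: predict the majority class of this node
    best = None  # (weighted impurity, feature index, threshold)
    for f in rng.sample(range(len(X[0])), n_features):
        values = sorted(set(row[f] for row in X))
        # Candidate thresholds are midpoints between consecutive distinct values.
        for low, high in zip(values, values[1:]):
            t = (low + high) / 2
            left = [label for row, label in zip(X, y) if row[f] <= t]
            right = [label for row, label in zip(X, y) if row[f] > t]
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
            if best is None or score < best[0]:
                best = (score, f, t)
    if best is None:
        return majority  # no usable split: the sampled features are constant here
    _, f, t = best
    left_idx = [i for i, row in enumerate(X) if row[f] <= t]
    right_idx = [i for i, row in enumerate(X) if row[f] > t]
    return {
        "feature": f,
        "threshold": t,
        "left": build_tree([X[i] for i in left_idx], [y[i] for i in left_idx],
                           max_depth - 1, n_features, rng),
        "right": build_tree([X[i] for i in right_idx], [y[i] for i in right_idx],
                            max_depth - 1, n_features, rng),
    }


def predict_tree(node, x):
    """Follow the splits from the root down to a leaf label."""
    while isinstance(node, dict):
        node = node["left"] if x[node["feature"]] <= node["threshold"] else node["right"]
    return node


def fit_forest(X, y, n_trees=25, max_depth=3, n_features=1, seed=0):
    """Train each tree on a bootstrap sample (rows drawn with replacement)."""
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(X)) for _ in range(len(X))]
        forest.append(build_tree([X[i] for i in idx], [y[i] for i in idx],
                                 max_depth, n_features, rng))
    return forest


def predict_forest(forest, x):
    """Return the mode (majority vote) of the individual trees' predictions."""
    votes = Counter(predict_tree(tree, x) for tree in forest)
    return votes.most_common(1)[0][0]


# Toy data (hypothetical): three well-separated classes with two features.
X = [[1.0, 9.0], [1.2, 8.8], [0.8, 9.1],
     [5.0, 1.0], [5.1, 1.2], [4.9, 0.9],
     [9.0, 5.0], [8.9, 5.2], [9.2, 4.8]]
y = ["a", "a", "a", "b", "b", "b", "c", "c", "c"]

forest = fit_forest(X, y)
print(predict_forest(forest, [1.1, 8.9]))  # → a
```

A tree whose bootstrap sample happens to miss a class can vote wrongly, but with many trees the majority vote still recovers the right label, which is the averaging effect described above.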

Use Case Examples:

- Image Classification: Categorizing images into multiple classes such as animals or objects.

- Customer Segmentation: Grouping customers into categories based on purchasing behavior.

- Medical Diagnosis: Classifying diseases from patient symptom data.

- Credit Scoring: Assigning risk categories to loan applicants.

- Text Categorization: Classifying documents into topics or genres.

| Criterion | Recommendation |
|---|---|
| Dataset Size | 🟡 Medium |
| Training Complexity | 🟡 Medium |

k-Nearest Neighbors

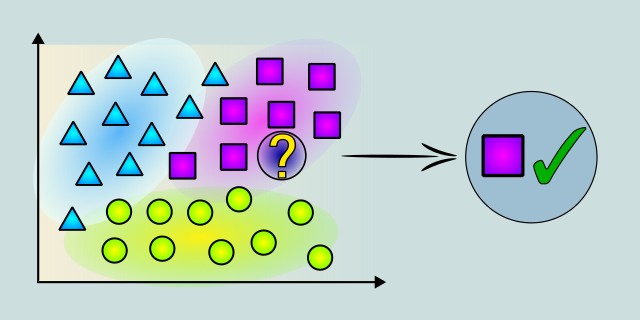

k-Nearest Neighbors (k-NN) is a simple, instance-based learning algorithm that classifies a data point based on the majority class among its k closest neighbors in the feature space. It does not require explicit training, making it easy to implement and understand.
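The prediction rule above can be sketched in a few lines of Python. This brute-force version assumes numeric feature lists and Euclidean distance (`math.dist`, Python 3.8+). The function `knn_predict` and the toy data are hypothetical, and ties in the vote go to the label whose nearest member is closest.

```python
import math
from collections import Counter


def knn_predict(X_train, y_train, x, k=3):
    """Classify x by majority vote among its k nearest training points."""
    # Brute force: Euclidean distance from x to every training point, nearest first.
    neighbors = sorted(
        (math.dist(row, x), label) for row, label in zip(X_train, y_train)
    )
    nearest_labels = [label for _, label in neighbors[:k]]
    # Counter keeps first-seen order for equal counts, so ties favor closer neighbors.
    return Counter(nearest_labels).most_common(1)[0][0]


# Toy training data (hypothetical): three classes in a 2-D feature space.
X_train = [[1.0, 1.0], [1.5, 2.0], [2.0, 1.0],
           [6.0, 6.0], [6.5, 7.0], [7.0, 6.0],
           [1.0, 7.0], [1.5, 8.0], [2.0, 7.5]]
y_train = ["red", "red", "red", "green", "green", "green", "blue", "blue", "blue"]

print(knn_predict(X_train, y_train, [1.4, 1.3]))  # → red
print(knn_predict(X_train, y_train, [6.2, 6.5]))  # → green
```

There is no training step: the "model" is just the stored training set, and all the work happens at prediction time.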

k-NN is intuitive and effective for small to medium-sized datasets, but prediction becomes computationally expensive as the dataset grows, because a brute-force implementation computes the distance from each query point to every training point. Results are sensitive to the choice of k and of the distance metric. Because k-NN relies on distances, feature scaling is important: features with large numeric ranges would otherwise dominate the distance. It works well for multi-class classification when the classes are clearly separated in feature space.
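The effect of feature scaling can be shown with a toy example. In the hypothetical data below, the class depends only on the second feature (values near 1 versus near 9), while the first feature spans tens of thousands. Without scaling, 1-nearest-neighbor matching is dominated by the first feature; after z-score standardization fitted on the training data, the query is matched to the expected class. The helper names are illustrative, and z-score standardization is one common choice among several (min-max scaling is another).

```python
import math
import statistics


def fit_standardizer(X):
    """Per-feature mean and population standard deviation, computed on training data."""
    columns = list(zip(*X))
    means = [statistics.mean(col) for col in columns]
    # Fall back to 1.0 for a constant feature to avoid dividing by zero.
    stds = [statistics.pstdev(col) or 1.0 for col in columns]
    return means, stds


def standardize(X, means, stds):
    """Z-score each feature: subtract the training mean, divide by the training std."""
    return [[(v - m) / s for v, m, s in zip(row, means, stds)] for row in X]


def nearest_label(X_train, y_train, x):
    """1-nearest-neighbor prediction using Euclidean distance."""
    _, label = min((math.dist(row, x), label) for row, label in zip(X_train, y_train))
    return label


# Hypothetical data: the class depends only on the second feature (about 1 vs about 9),
# while the first feature varies in the tens of thousands.
X_train = [[30_000, 1.0], [60_000, 1.2], [31_000, 9.0], [59_000, 8.8]]
y_train = ["A", "A", "B", "B"]
query = [58_000, 1.1]

print(nearest_label(X_train, y_train, query))  # → B: the large-range feature dominates

means, stds = fit_standardizer(X_train)
X_scaled = standardize(X_train, means, stds)
query_scaled = standardize([query], means, stds)[0]
print(nearest_label(X_scaled, y_train, query_scaled))  # → A
```

The scaling statistics come from the training data only and are then reused for every query, so training and query points live on the same scale.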

Use Case Examples:

- Handwritten Digit Recognition: Classifying handwritten numbers based on pixel similarity.

- Recommender Systems: Suggesting products or content based on similar users or items.

- Customer Classification: Grouping customers by purchasing behavior for targeted marketing.

- Disease Diagnosis: Predicting disease categories based on patient symptom similarity.

- Image Recognition: Identifying objects in images by comparing with labeled examples.

| Criterion | Recommendation |
|---|---|
| Dataset Size | 🟡 Medium |
| Training Complexity | 🟢 Low |

Neural Networks

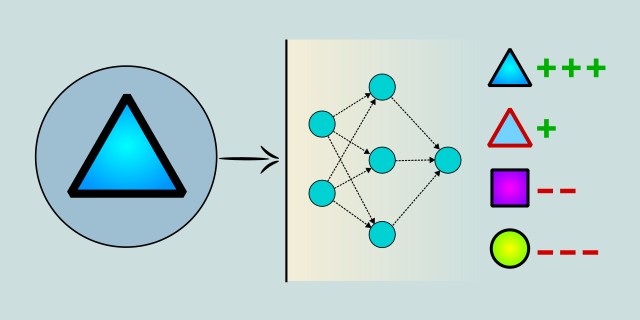

Neural Networks are a class of machine learning models inspired by the human brain’s structure. They consist of layers of interconnected nodes (neurons) that learn to recognize complex patterns in data. Neural networks are highly flexible and powerful for multi-class classification tasks.
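To illustrate layers of interconnected neurons, the sketch below runs a forward pass through a tiny network with two inputs, one hidden layer of three ReLU neurons, and a softmax output layer with one neuron per class, a common setup for multi-class classification. The weights are made up rather than learned, so the output only demonstrates the mechanics; the layer sizes, parameter names, and values are all hypothetical.

```python
import math


def dense(x, W, b):
    """Fully connected layer: each neuron takes a weighted sum of all inputs plus a bias."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]


def relu(v):
    """Rectified linear activation, applied element-wise."""
    return [max(0.0, a) for a in v]


def softmax(z):
    """Convert raw class scores into probabilities that sum to 1."""
    m = max(z)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(a - m) for a in z]
    total = sum(exps)
    return [e / total for e in exps]


def forward(x, params):
    """Input -> hidden layer (ReLU) -> output layer (softmax, one neuron per class)."""
    hidden = relu(dense(x, params["W1"], params["b1"]))
    return softmax(dense(hidden, params["W2"], params["b2"]))


# Made-up (untrained) weights: 2 inputs -> 3 hidden neurons -> 3 classes.
params = {
    "W1": [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]],
    "b1": [0.0, 0.0, -1.0],
    "W2": [[2.0, 0.0, 0.0], [0.0, 2.0, 0.0], [0.0, 0.0, 2.0]],
    "b2": [0.0, 0.0, 0.0],
}

probs = forward([2.0, 0.5], params)
print([round(p, 3) for p in probs])  # → [0.705, 0.035, 0.259]
print(probs.index(max(probs)))       # → 0 (predicted class)
```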

Neural networks can model nonlinear and complex relationships between inputs and outputs. They typically require substantial training data and computational resources, especially for deep architectures. Careful tuning of hyperparameters (such as the learning rate, the number of layers, and the number of neurons per layer) is essential for good performance. Neural networks are widely used in image, speech, and text classification.
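The role of the learning rate can be seen in a minimal training loop. For brevity, this sketch trains softmax regression, which is equivalent to a neural network with no hidden layer, using full-batch gradient descent on the mean cross-entropy loss; training hidden layers would additionally require backpropagation through them, which is omitted here. The `learning_rate` and `epochs` values, the function names, and the toy data are assumptions chosen for this example.

```python
import math


def softmax(z):
    """Convert raw class scores into probabilities that sum to 1."""
    m = max(z)
    exps = [math.exp(a - m) for a in z]
    total = sum(exps)
    return [e / total for e in exps]


def scores(W, x):
    """Linear class scores; the last weight in each row is a bias (constant input of 1)."""
    xb = list(x) + [1.0]
    return [sum(w * v for w, v in zip(row, xb)) for row in W]


def cross_entropy(W, X, y):
    """Mean negative log-probability assigned to the true class."""
    return sum(-math.log(softmax(scores(W, x))[label]) for x, label in zip(X, y)) / len(X)


def train_softmax_regression(X, y, n_classes, learning_rate=0.05, epochs=10_000):
    """Full-batch gradient descent on the mean cross-entropy loss."""
    n_inputs = len(X[0]) + 1  # features plus the bias input
    W = [[0.0] * n_inputs for _ in range(n_classes)]
    for _ in range(epochs):
        grad = [[0.0] * n_inputs for _ in range(n_classes)]
        for x, label in zip(X, y):
            xb = list(x) + [1.0]
            probs = softmax(scores(W, x))
            for k in range(n_classes):
                # Gradient of the loss w.r.t. class k's score: p_k - 1[k == label].
                err = probs[k] - (1.0 if k == label else 0.0)
                for j in range(n_inputs):
                    grad[k][j] += err * xb[j] / len(X)
        # Move every weight a small step against its gradient.
        for k in range(n_classes):
            for j in range(n_inputs):
                W[k][j] -= learning_rate * grad[k][j]
    return W


def predict(W, x):
    """Return the index of the highest-scoring class."""
    s = scores(W, x)
    return s.index(max(s))


# Toy data (hypothetical): three clusters, labels are class indices 0, 1, 2.
X = [[0.0, 0.0], [0.5, 0.0], [0.0, 0.5],
     [4.0, 0.0], [4.5, 0.0], [4.0, 0.5],
     [0.0, 4.0], [0.5, 4.0], [0.0, 4.5]]
y = [0, 0, 0, 1, 1, 1, 2, 2, 2]

W = train_softmax_regression(X, y, n_classes=3)
print(round(cross_entropy(W, X, y), 3))  # well below the starting loss of ln(3) ≈ 1.099
print([predict(W, x) for x in X])        # → [0, 0, 0, 1, 1, 1, 2, 2, 2]
```

A learning rate that is too large can make the loss oscillate or diverge, while one that is too small needs many more epochs to converge; in practice it is tuned together with the number of layers and neurons.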

Use Case Examples:

- Image Classification: Identifying objects or animals in photographs.

- Speech Recognition: Classifying spoken words into a fixed vocabulary of word classes.

- Sentiment Analysis: Detecting sentiment in social media posts or reviews.

- Medical Diagnosis: Classifying diseases based on patient scans and symptoms.

- Handwritten Character Recognition: Recognizing handwritten letters or digits.

| Criterion | Recommendation |
|---|---|
| Dataset Size | 🔴 Large |
| Training Complexity | 🔴 High |
