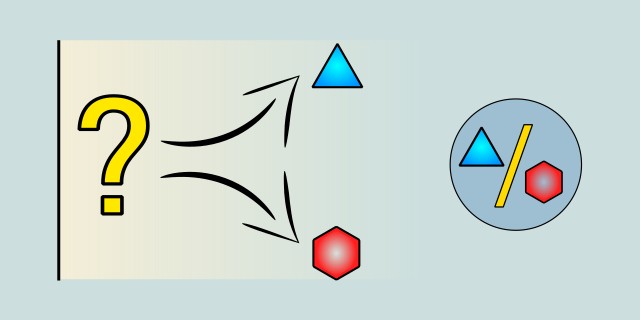

Binary Classification

Binary classification is a type of classification task where each instance in the dataset is assigned to one of two possible classes. This is a fundamental problem in machine learning and can be approached using various algorithms, each with its strengths and weaknesses.

Which binary classification algorithm suits your problem best?

Do you prioritize interpretability, flexibility, or handling complex boundaries?

Tips:

- If you need a simple, fast, and highly interpretable model, go with Logistic Regression.

- If your data is high-dimensional or has non-linear decision boundaries, choose SVM (Support Vector Machine).

- If you want a model that can capture complex rules and is easy to visualize, choose Decision Tree.

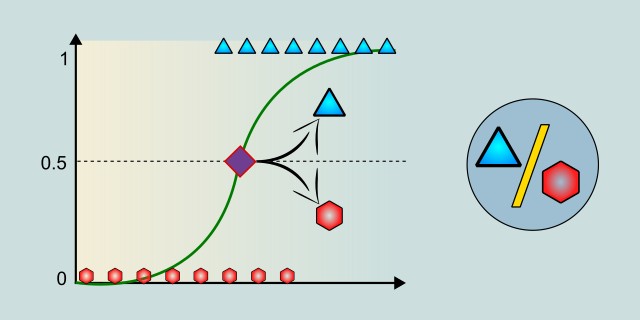

Logistic Regression

Logistic Regression is a statistical method used for binary classification problems. It models the probability that a given input belongs to a particular category by applying the logistic (sigmoid) function to a linear combination of input features.
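In symbols, write the input as a feature vector x, with a weight vector w and a bias b. This notation is introduced here for illustration. The model and its log-odds form are then:

```latex
\sigma(z) = \frac{1}{1 + e^{-z}}, \qquad
P(y = 1 \mid \mathbf{x}) = \sigma(\mathbf{w}^\top \mathbf{x} + b), \qquad
\log \frac{P(y = 1 \mid \mathbf{x})}{1 - P(y = 1 \mid \mathbf{x})} = \mathbf{w}^\top \mathbf{x} + b
```

Because σ(z) ≥ 0.5 exactly when z ≥ 0, thresholding the probability at 0.5 yields the linear decision boundary w·x + b = 0.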

Despite its name, logistic regression is a classification algorithm, not a regression one. It estimates the probability of a binary outcome (e.g., yes/no, true/false) as a value between 0 and 1. That probability is then thresholded, commonly at 0.5, to assign a class. It's widely used because of its simplicity, efficiency, and interpretability. Logistic regression works well when the log-odds of the outcome are approximately a linear function of the features, that is, when a linear decision boundary separates the classes reasonably well. Its coefficients can be analyzed to understand the influence of each feature: each coefficient gives the change in log-odds per unit increase in that feature, holding the others fixed. However, it may underperform on complex, non-linear problems compared to more flexible models, unless suitable non-linear feature transformations are added by hand.
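The following is a minimal from-scratch sketch of the idea. It assumes plain Python lists as input and uses batch gradient descent on the mean log loss as the training method. Libraries such as scikit-learn use more sophisticated solvers. The function names, learning rate, epoch count, and toy data are all hypothetical choices for illustration.

```python
import math


def sigmoid(z):
    """Logistic function, written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def linear_score(w, b, x):
    """Linear combination of the features: w . x + b."""
    return sum(wj * xj for wj, xj in zip(w, x)) + b


def fit_logistic_regression(X, y, lr=0.1, epochs=1000):
    """Fit weights and bias by batch gradient descent on the mean log loss.

    X is a list of feature lists, y a list of 0/1 labels (hypothetical API).
    """
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            # Gradient of the log loss w.r.t. the linear score is (p - y)
            err = sigmoid(linear_score(w, b, xi)) - yi
            for j in range(d):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def predict_proba(w, b, x):
    """Estimated probability that x belongs to class 1."""
    return sigmoid(linear_score(w, b, x))


def predict(w, b, x, threshold=0.5):
    """Hard 0/1 decision by thresholding the probability."""
    return 1 if predict_proba(w, b, x) >= threshold else 0


# Hypothetical toy data: one feature, slightly overlapping classes
X = [[-2.0], [-1.5], [-1.0], [-0.5], [0.5], [1.0], [1.5], [2.0]]
y = [0, 0, 0, 1, 0, 1, 1, 1]
w, b = fit_logistic_regression(X, y)
print(predict(w, b, [-2.0]), predict(w, b, [2.0]))  # prints "0 1"
```

The toy data is symmetric around zero, so the learned bias stays essentially at zero. The positive weight then places the boundary near x = 0. You can read the single coefficient in `w` directly as the change in log-odds per unit of the feature.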

Use Case Examples:

- Email Spam Detection: Classifying emails as spam or not based on content features.

- Customer Churn Prediction: Predicting whether a customer will leave a service.

- Credit Risk Assessment: Determining if a loan applicant is likely to default.

- Disease Diagnosis: Predicting the presence or absence of a medical condition (e.g., diabetes).

- Marketing Response Modeling: Estimating if a customer will respond to a campaign.

| Criterion | Recommendation |
|---|---|
| Dataset Size | 🟡 Medium |
| Training Complexity | 🟢 Low |

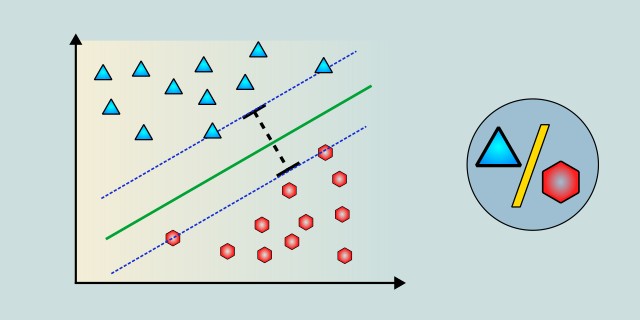

Support Vector Machine

Support Vector Machine (SVM) is a supervised learning algorithm primarily used for classification tasks, especially binary classification. It finds the optimal hyperplane that separates data points of different classes with the maximum margin.
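For training points x_i with labels y_i ∈ {−1, +1}, the standard soft-margin formulation is shown below. The notation is assumed here, not taken from the original. The margin width is 2/‖w‖, so minimizing ‖w‖² maximizes it. The slack variables ξ_i let some points violate the margin at a cost controlled by C. Setting every ξ_i = 0 recovers the hard-margin case for perfectly separable data.

```latex
\min_{\mathbf{w},\, b,\, \boldsymbol{\xi}} \; \frac{1}{2}\lVert \mathbf{w} \rVert^2 + C \sum_{i=1}^{n} \xi_i
\quad \text{subject to} \quad
y_i\,(\mathbf{w}^\top \mathbf{x}_i + b) \ge 1 - \xi_i, \qquad \xi_i \ge 0, \qquad i = 1, \dots, n
```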

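SVMs are powerful in high-dimensional spaces and remain effective even when the number of features exceeds the number of samples. They support both linear and non-linear classification. Kernel functions (e.g., polynomial, RBF) implicitly map the data into a higher-dimensional space where a linear separator may exist. Maximizing the margin, together with the regularization parameter C, helps limit overfitting, especially in high-dimensional settings. However, SVMs may struggle with noisy data or heavily overlapping classes, and their results are sensitive to the choice of kernel, C, and feature scaling. Training kernel SVMs can be computationally intensive for large datasets, because training time typically grows at least quadratically with the number of samples. Nonetheless, SVMs are widely used in text classification, image recognition, and bioinformatics because of their strong theoretical foundations and solid performance.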
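The following is a minimal sketch of a *linear* SVM with no kernel. It assumes labels encoded as -1/+1 and trains by full-batch subgradient descent on the regularized hinge loss. That loss is an unconstrained form of the soft-margin objective, where the hypothetical parameter `lam` plays a role inverse to C. Production libraries work differently; scikit-learn's `SVC`, for example, builds on LIBSVM and solves the dual problem, which is what makes kernels practical. All names, hyperparameters, and data here are illustrative assumptions.

```python
def svm_score(w, b, x):
    """Signed score w . x + b; its sign gives the predicted class."""
    return sum(wj * xj for wj, xj in zip(w, x)) + b


def fit_linear_svm(X, y, lam=0.01, lr=0.1, epochs=1000):
    """Minimise (lam / 2) * ||w||^2 + mean hinge loss by full-batch
    subgradient descent. Labels in y must be -1 or +1 (hypothetical API)."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w = [lam * wj for wj in w]  # gradient of the regulariser
        grad_b = 0.0                     # the bias is not regularised
        for xi, yi in zip(X, y):
            # Only points inside the margin (or misclassified) contribute
            if yi * svm_score(w, b, xi) < 1:
                for j in range(d):
                    grad_w[j] -= yi * xi[j] / n
                grad_b -= yi / n
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def svm_predict(w, b, x):
    """Class +1 on the positive side of the hyperplane, -1 otherwise."""
    return 1 if svm_score(w, b, x) >= 0 else -1


# Hypothetical toy data: two well-separated clusters in 2-D
X = [[2.0, 2.0], [1.5, 2.5], [2.5, 1.5],
     [-2.0, -2.0], [-1.5, -2.5], [-2.5, -1.5]]
y = [1, 1, 1, -1, -1, -1]
w, b = fit_linear_svm(X, y)
print([svm_predict(w, b, x) for x in X])  # prints [1, 1, 1, -1, -1, -1]
```

Points that already lie beyond the margin contribute nothing to the update. This is the property that makes the final hyperplane depend only on the support vectors.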

Use Case Examples:

- Face Recognition: Classifying whether a face matches a known individual.

- Text Categorization: Sorting emails or articles into categories like spam/news/entertainment.

- Cancer Detection: Classifying tumors as malignant or benign.

- Financial Fraud Detection: Detecting unusual transaction patterns.

- Voice Recognition: Distinguishing between different speakers.

| Criterion | Recommendation |
|---|---|
| Dataset Size | 🟢 Small / 🟡 Medium |
| Training Complexity | 🔴 High |

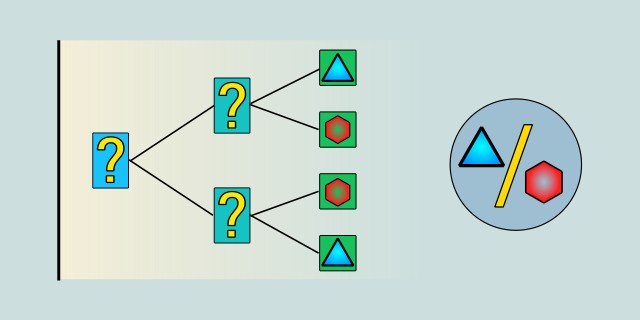

Decision Tree Classification

A Decision Tree is a supervised learning algorithm used for both classification and regression tasks. It splits the data into branches based on feature values, creating a tree-like structure where each node represents a decision rule and each leaf represents an outcome.

Decision Trees are intuitive, easy to interpret, and require little data preprocessing. They handle both numerical and categorical data well. However, they are prone to overfitting, especially with deep trees or noisy data. Techniques like pruning, setting depth limits, or using ensemble methods (like Random Forests) are often used to mitigate this. Decision Trees serve as a strong baseline model and are useful when explainability is important.
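The splitting process can be sketched from scratch as follows. The sketch assumes Gini impurity as the split criterion, which is a common choice; entropy is another. A `max_depth` argument provides the depth limit mentioned above. The loan-approval data, the feature meanings, and all function names are hypothetical.

```python
from collections import Counter


def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_split(X, y):
    """Return the (feature, threshold) pair with the lowest weighted Gini
    impurity, or None if no split improves on the current node."""
    best, best_score, n = None, gini(y), len(y)
    for f in range(len(X[0])):
        for t in sorted({row[f] for row in X}):
            left = [yi for row, yi in zip(X, y) if row[f] <= t]
            right = [yi for row, yi in zip(X, y) if row[f] > t]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / n
            if score < best_score:
                best, best_score = (f, t), score
    return best


def build_tree(X, y, depth=0, max_depth=3):
    """Grow the tree recursively; each leaf holds the majority class."""
    majority = Counter(y).most_common(1)[0][0]
    if depth >= max_depth or len(set(y)) == 1:
        return majority
    split = best_split(X, y)
    if split is None:
        return majority
    f, t = split
    left = [i for i, row in enumerate(X) if row[f] <= t]
    right = [i for i, row in enumerate(X) if row[f] > t]
    return {
        "feature": f,
        "threshold": t,
        "left": build_tree([X[i] for i in left], [y[i] for i in left], depth + 1, max_depth),
        "right": build_tree([X[i] for i in right], [y[i] for i in right], depth + 1, max_depth),
    }


def tree_predict(tree, x):
    """Follow the decision rules from the root until a leaf label is reached."""
    while isinstance(tree, dict):
        tree = tree["left"] if x[tree["feature"]] <= tree["threshold"] else tree["right"]
    return tree


# Hypothetical loan data: [income in k, existing debt in k]; 1 = approve
X = [[25, 10], [30, 5], [50, 40], [60, 35], [55, 5], [70, 10]]
y = [0, 0, 0, 0, 1, 1]
tree = build_tree(X, y, max_depth=3)
print(tree_predict(tree, [65, 8]), tree_predict(tree, [40, 5]))  # prints "1 0"
```

On this data the learned rules read directly as "reject if income ≤ 50; otherwise approve if debt ≤ 10". This readability is the property that makes trees easy to explain. Lowering `max_depth` is the simplest way to trade some training accuracy for less overfitting.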

Use Case Examples:

- Loan Approval: Deciding whether to approve or reject a loan based on applicant details.

- Medical Diagnosis: Classifying patient conditions based on symptoms and test results.

- Customer Segmentation: Categorizing customers by purchasing behavior.

- Credit Scoring: Evaluating the risk level of loan applicants.

- Product Recommendation: Suggesting products based on user preferences.

| Criterion | Recommendation |
|---|---|
| Dataset Size | 🟢 Small / 🟡 Medium / 🔴 Large |
| Training Complexity | 🟢 Low |
